Luminosity

Luminosity is the amount of electromagnetic radiation a body emits per unit of time.

Luminosity includes all frequencies of the electromagnetic spectrum, so regions beyond the visible spectrum must also be taken into account. In astronomical settings, luminosity is a difficult quantity to measure, notably because of:

Extinction: space is not empty. Over large distances, such as the ones between planets, stars, galaxies, etc., radiation can be lost due to absorption by obstacles like dust and gas clouds. This loss of intensity of electromagnetic radiation is known as extinction, which affects higher frequencies more prominently than lower ones.

Luminosity is measured in watts (W) and, assuming that stars emit as black bodies, depends on the surface area of the body and its temperature. Assuming that stars emit as black bodies means treating them as ideal absorbers and emitters of radiation, with no losses. This assumption turns out to be a good approximation for stars.
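As a minimal sketch of this dependence, the Stefan-Boltzmann law gives the luminosity of a spherical black body as its surface area times σT⁴. The function name and the solar radius and temperature used below are illustrative assumptions, not values taken from the text above.

```python
import math

# Stefan-Boltzmann constant in W m^-2 K^-4.
STEFAN_BOLTZMANN = 5.670374419e-8


def black_body_luminosity(radius_m: float, temperature_k: float) -> float:
    """Luminosity in watts of a spherical black body (hypothetical helper)."""
    surface_area = 4.0 * math.pi * radius_m ** 2
    return surface_area * STEFAN_BOLTZMANN * temperature_k ** 4


# Assumed nominal solar values: radius 6.957e8 m, effective temperature 5772 K.
sun_luminosity = black_body_luminosity(6.957e8, 5772.0)
print(f"{sun_luminosity:.3e} W")
```

With these assumed inputs the result is of the order of 10^26 W. Because temperature enters as a fourth power, doubling the temperature at fixed radius raises the luminosity by a factor of 16.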

Apparent magnitude

As early as the 2nd century BCE, Hipparchus classified stars according to their brightness in the sky. He did so on a scale from one for the brightest stars to six for the dimmest.

In 1856, Norman Pogson formalised the scale so that magnitude one stars are 100 times brighter than magnitude six stars, which means that a difference of one magnitude corresponds to a brightness factor of about 2.512 (the fifth root of 100). This suggested the use of a logarithmic scale for the apparent brightness we measure from the earth, which is possible because magnitude is just a conventional scale created by scientists to refer to common quantities.

Another advantage of such a scale is that once a fixed point (a reference star) is chosen, we can define all other magnitudes relative to it. Usually, the star Vega is assigned an apparent magnitude of 0 (about 2.512 times brighter than a star of magnitude one).

The relevance of the ‘apparent’ character comes from the fact that we are measuring brightness from the earth. This means that we do not correct for extinction or for the spreading of radiation with distance. We just work with the flux of radiation received per unit of area and compare our results on a logarithmic scale.

The standard formula for the apparent magnitude, with Vega being assigned a value of 0, is:

\[m = -2.5 \cdot \log_{10} \Big( \frac{F}{F_V} \Big)\]

Here, the logarithm is in base 10, m is the apparent magnitude, F is the flux of radiation received from the body per unit of time and area, and \(F_V\) is the flux of radiation received from Vega per unit of time and area. The minus sign ensures that brighter objects have lower magnitudes, in line with the historical scale.

The use of a logarithmic scale includes the possibility of having negative magnitudes. For instance, Sirius, the brightest star in our night sky, has an apparent magnitude of -1.46, which means that it appears about 3.8 times brighter than Vega (2.512 raised to the power of 1.46).
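The relation between flux ratios and magnitudes can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the sketch uses the usual sign convention in which a larger flux gives a smaller magnitude.

```python
import math


def apparent_magnitude(flux: float, reference_flux: float) -> float:
    """Magnitude relative to a reference source that is assigned magnitude 0."""
    return -2.5 * math.log10(flux / reference_flux)


def brightness_ratio(m_a: float, m_b: float) -> float:
    """How many times brighter object a appears than object b."""
    return 10.0 ** (-0.4 * (m_a - m_b))


# One magnitude step: the fifth root of 100.
one_step = 100.0 ** (1.0 / 5.0)

# Sirius (m = -1.46) compared with Vega (m = 0).
sirius_vs_vega = brightness_ratio(-1.46, 0.0)

print(round(one_step, 3), round(sirius_vs_vega, 2))
```

A source delivering 100 times the reference flux gets a magnitude of -5, which is the five-magnitude, factor-of-100 relation read in the other direction.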

The benefits of a logarithmic scale

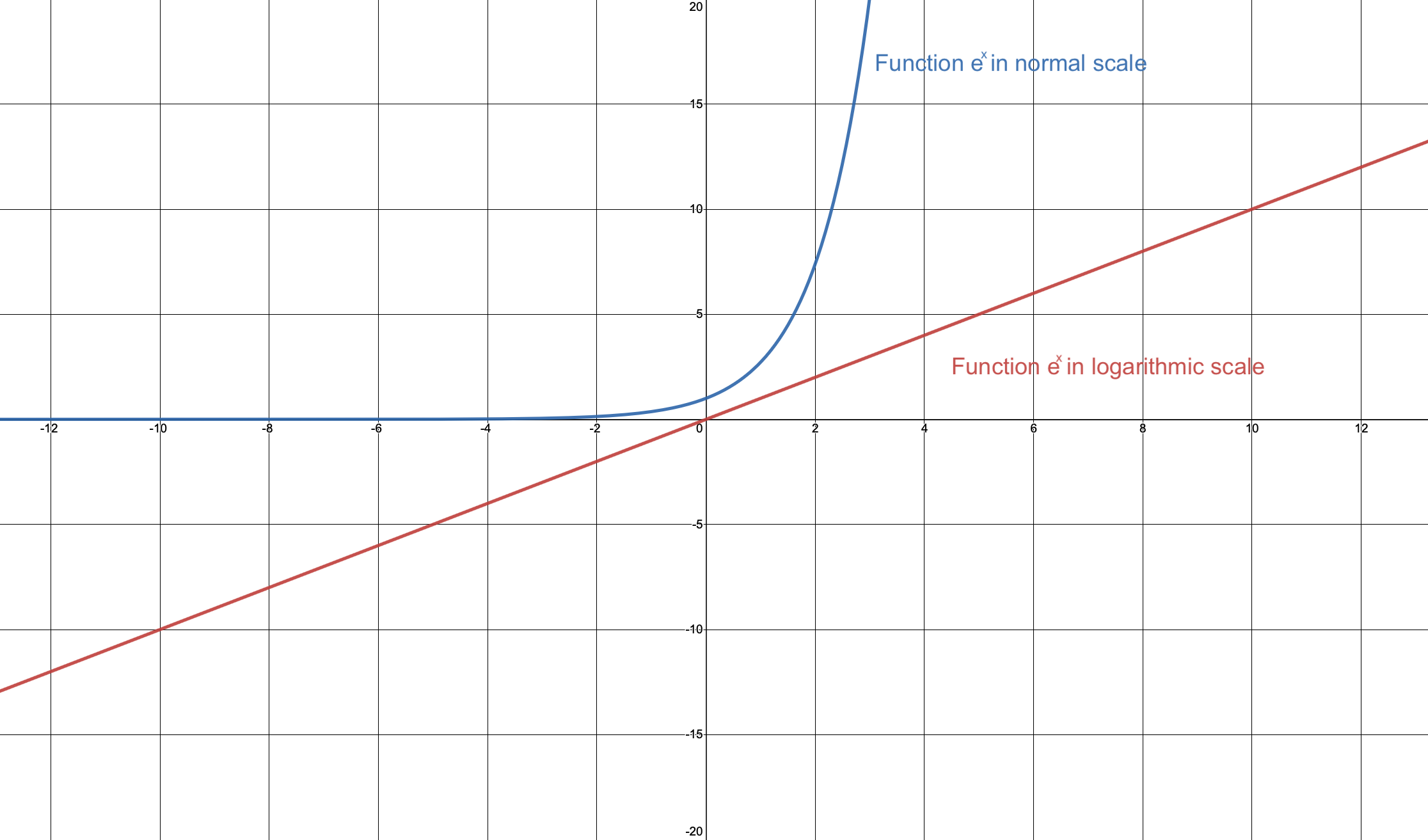

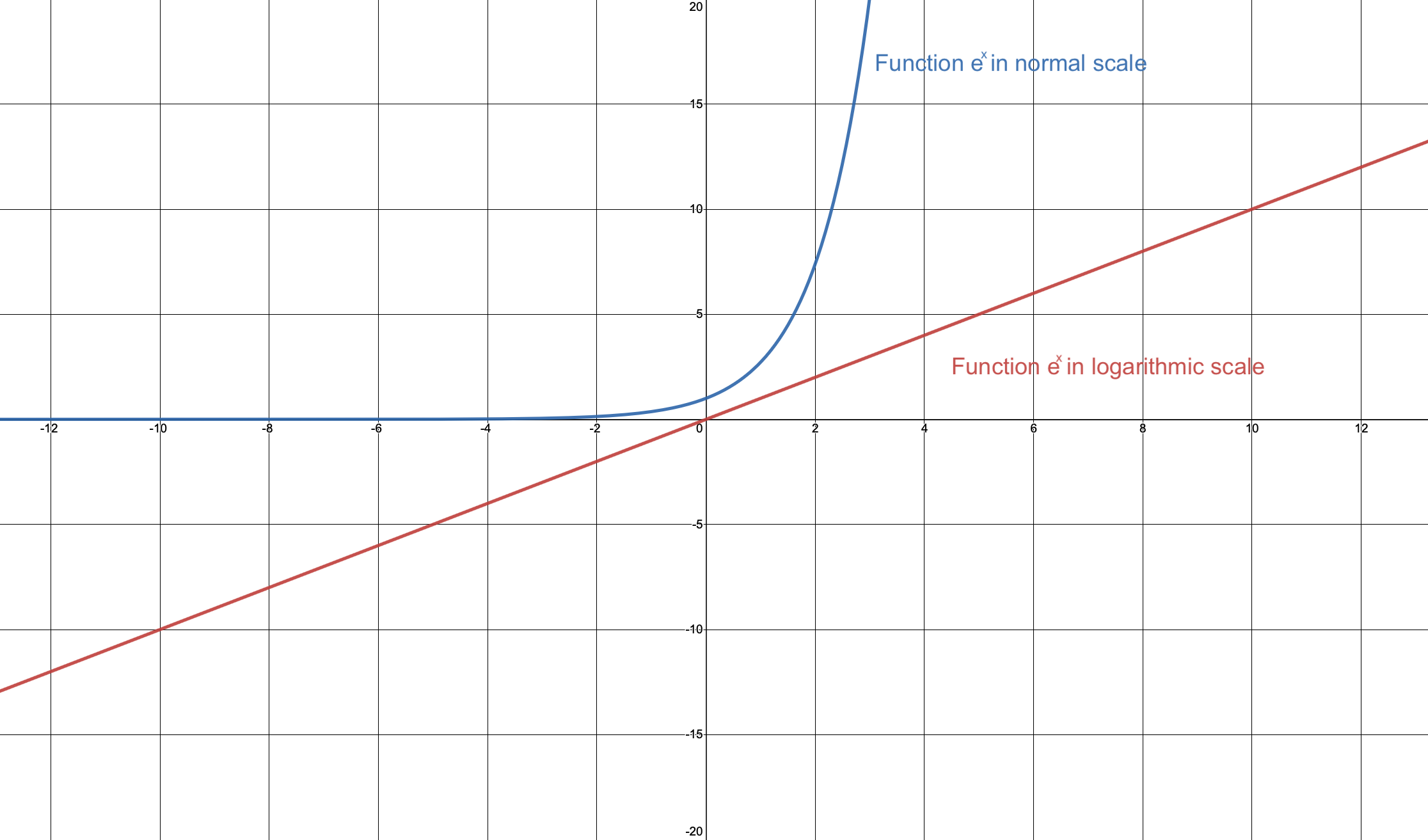

As we have seen, there are historical reasons for using a logarithmic scale when considering magnitudes: a linear quantity (magnitude) was related to a multiplicative scale (brightness). It turns out that when we consider phenomena of this type (where multiplicative effects generate big numbers), using a logarithmic scale helps to recover a linear behaviour. It also allows us to work with relatively small numbers (a kind of data ‘taming’).

There is a useful measure of the intensity of a star that does not depend on the position of the earth in space and removes the effect of radiation spreading with distance. This concept involves the use of distances to the objects under study and leads to the appearance of large numbers, which are again tamed by a logarithmic scale.

Figure 2. A comparison between linear scale and logarithmic scale.

Absolute magnitude

Apparent magnitude has limited value because it depends on where the observer is. It mixes the intrinsic brightness of a source with its distance from the observer, so it offers limited information about the actual properties of the source. This limitation is what leads to the definition of absolute magnitude.

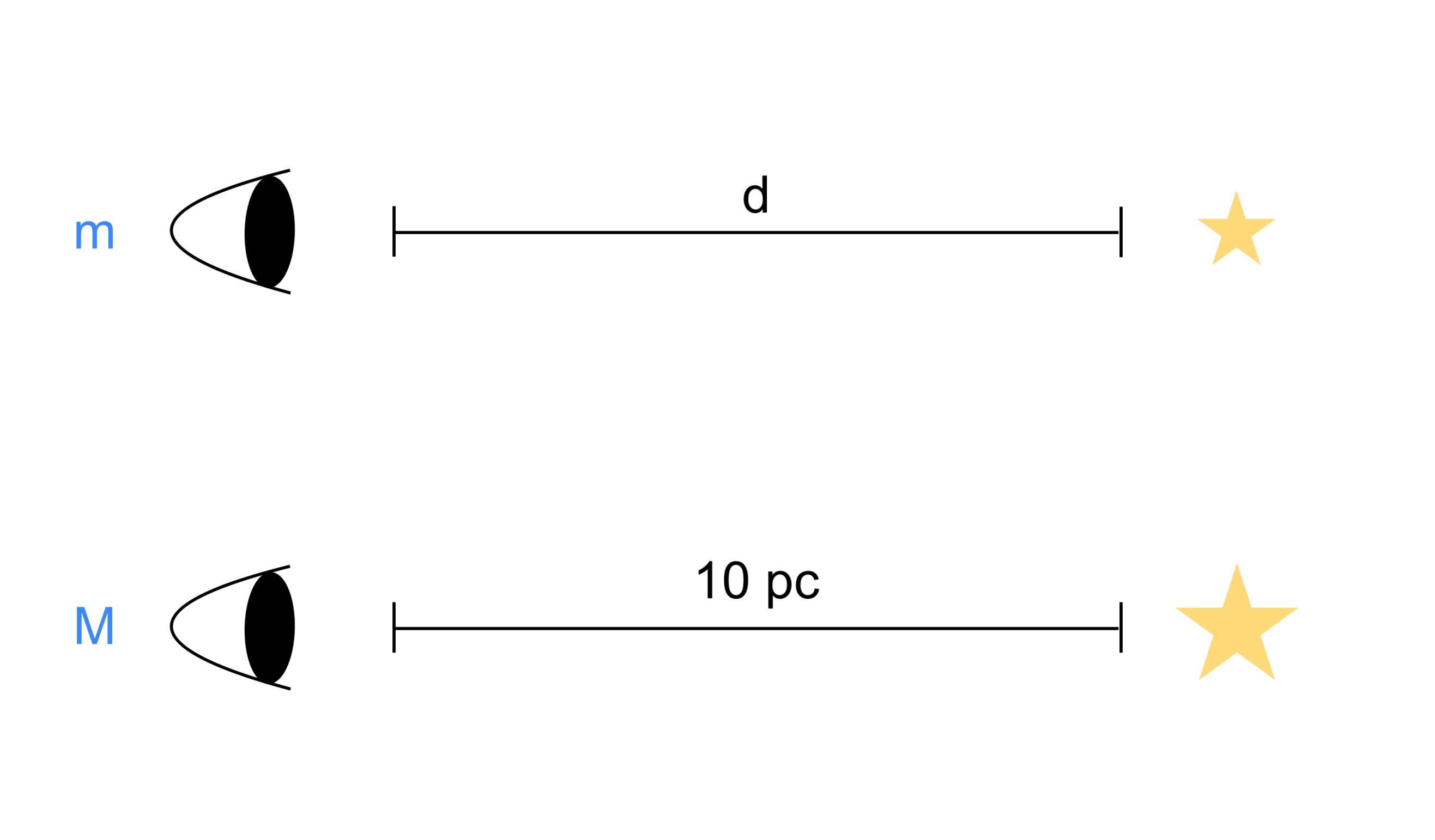

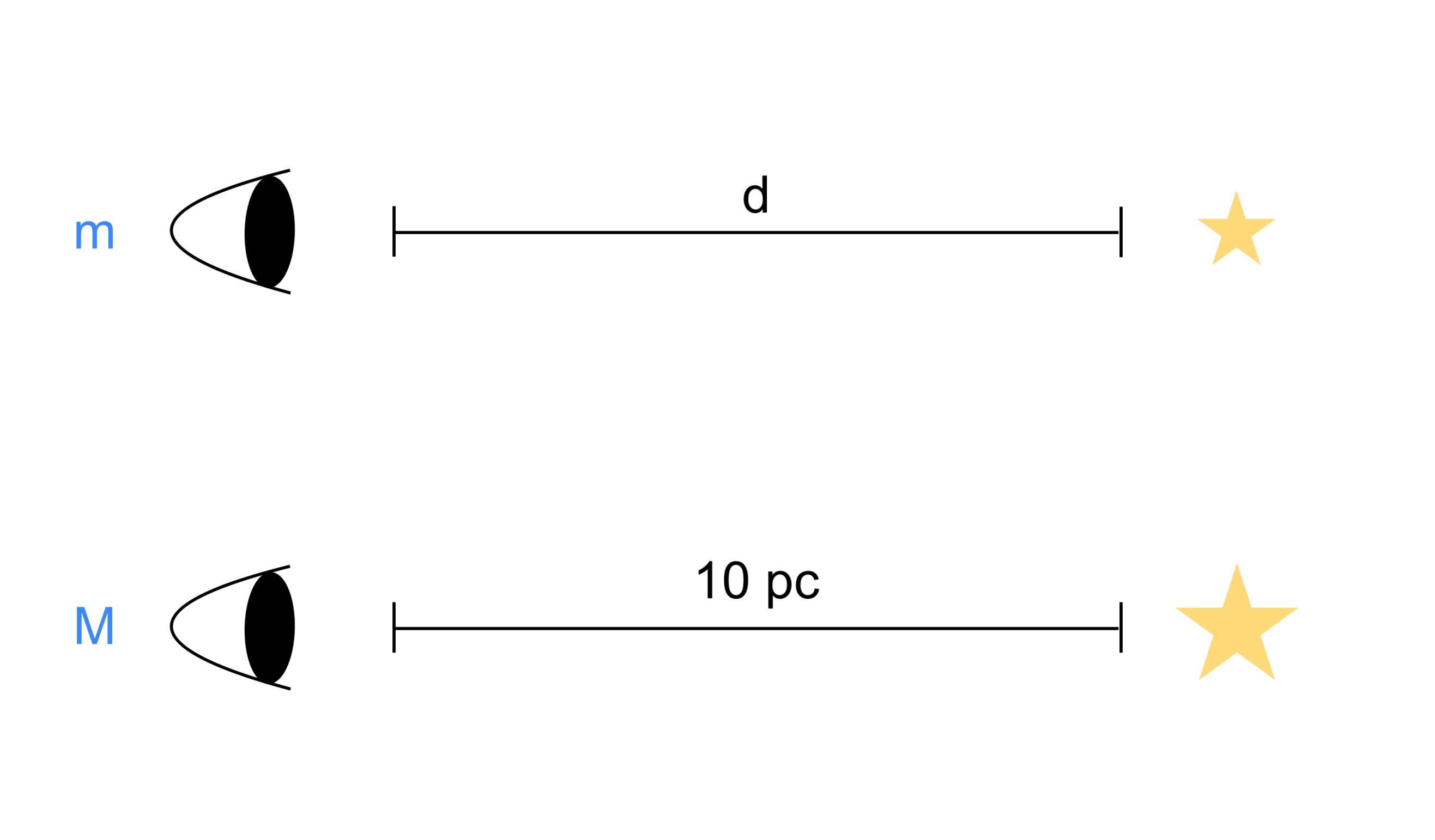

Absolute magnitude is the apparent magnitude of an object when observed from a distance of 10 parsecs. 1 parsec is equivalent to \(3.09 \cdot 10^{16}\) m, more than 200,000 times the distance between the sun and the earth.

This definition has the advantage that it is very closely related to the luminosity of stars. Because every object is placed at the same reference distance, its radiation spreads over the same area before being measured, so differences in absolute magnitude reflect differences in luminosity.

However, the widely used definition does not include information about extinction factors; it only takes into account the distance to the object. Therefore, it is not a completely accurate measurement of luminosity.

The absolute magnitude formula is:

\[M = m -5 \cdot \log_{10}(d) + 5\]

Here, M is the absolute magnitude, m is the apparent magnitude, and d is the distance between the earth and the object in parsecs.
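A minimal sketch of this formula in Python follows. The function name is hypothetical, and the distance to Sirius of about 2.64 parsecs is an assumed value that does not appear in the text; extinction is ignored, as in the formula itself.

```python
import math


def absolute_magnitude(apparent_mag: float, distance_pc: float) -> float:
    """M = m - 5 * log10(d) + 5, with d in parsecs and no extinction term."""
    return apparent_mag - 5.0 * math.log10(distance_pc) + 5.0


# Sirius: m = -1.46, assumed distance of about 2.64 pc.
sirius_abs = absolute_magnitude(-1.46, 2.64)
print(round(sirius_abs, 2))

# At exactly 10 pc the two magnitudes coincide by definition.
same_at_ten_pc = absolute_magnitude(3.0, 10.0)
```

The second call illustrates the definition: at d = 10 the logarithmic term equals 1, so the correction vanishes and M equals m.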

The differences between absolute and apparent magnitude

The key differences between these types of magnitudes are captured by their meanings. Apparent magnitude refers to how we see astronomical objects and, in particular, stars from the earth. Absolute magnitude is a dimensionless measure of the intrinsic luminosity of stars and other astronomical objects but, unless corrected, fails to take into account extinction factors.

Let us consider the examples of Sirius and Antares. Sirius, the brightest star in our night sky, appears brighter than Antares (that is, it has a lower apparent magnitude), even though Antares is a red supergiant with a huge luminosity, because Antares is very far away from the earth. Their apparent magnitudes are -1.46 and 1.09, respectively.

However, their absolute magnitudes are 1.42 and -5.28, respectively. Since a lower magnitude means a brighter object, this matches the fact that Antares has a much higher luminosity.
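To make the comparison concrete, the absolute magnitude formula can be inverted to estimate the distance implied by each pair of magnitudes, and the difference in absolute magnitude can be turned into a brightness ratio. This is a rough sketch with hypothetical function names; it ignores extinction and uses only the four magnitudes quoted above.

```python
def distance_pc(apparent_mag: float, absolute_mag: float) -> float:
    """Invert M = m - 5 * log10(d) + 5 to get the distance d in parsecs."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


def luminosity_ratio(abs_mag_a: float, abs_mag_b: float) -> float:
    """How many times more luminous object a is than object b."""
    return 10.0 ** (-0.4 * (abs_mag_a - abs_mag_b))


sirius_distance = distance_pc(-1.46, 1.42)
antares_distance = distance_pc(1.09, -5.28)
antares_vs_sirius = luminosity_ratio(-5.28, 1.42)

print(round(sirius_distance, 2), round(antares_distance), round(antares_vs_sirius))
```

Under these assumptions Sirius comes out at a few parsecs and Antares at nearly two hundred, while Antares is several hundred times more luminous in the band these magnitudes refer to.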

Figure 3. An illustration of the meaning of apparent magnitude (m) and absolute magnitude (M).

Key takeaways

Luminosity is the amount of electromagnetic radiation emitted by a body per unit of time.

Since, in space, luminosity is hard to measure, we need to consider other quantities known as magnitudes.

Apparent magnitude is a logarithmic measure of the flux of radiation received from objects as seen from the earth.

Absolute magnitude aims to eliminate the dependence of apparent magnitude on the distance to the earth and is defined as the apparent magnitude an object would have if observed from a distance of 10 parsecs.

Both magnitudes have limitations, such as the dependence on the distance to the earth or the lack of a correction for extinction, but they are nonetheless useful quantities in astronomical studies.